Why Deleting Old Low-Quality Articles Can Improve Rankings

For about the last decade, Google has prized one thing above all else on a website: quality. Websites with high-quality pages perform better in the search results than sites with middling or low-quality content. Sites with more quality content perform better than sites with less of it. That's just the way it goes.

With this in mind, some people feel that they need to go back and purge all the old content on their blog before they can start digging into new blogging efforts. Other people feel like old content is basically neutral; not worth anything positive, but not negative either.

The truth, as always, lies somewhere in the middle. It's entirely possible that you have dozens, hundreds, or even thousands of pages on your site that are actively working against you, but they might not be what you think.

Why Content Holds You Back

Google's algorithm prizes quality and the goalposts for that quality have been on the move for years. Google's Panda update in 2011 was the start, and it was a massive paradigm shift. Since then, standards – and competition – have only gotten higher.

Content that is too short, that lacks coverage of the topic it purports to cover, that fails to focus on a keyword, or that otherwise just kind of sucks can be holding back your site. Google effectively assigns it a slightly negative value, depending on how poorly it meets their guidelines. Often this shows up as a manual action, particularly for link penalties and thin content penalties, but not always.

When content is worth negative value, it's actually better for your site to remove it than it is to leave it. This is why sites with old blogs, particularly from before 2011, need to do something.

What can you do? A site audit. Here's how.

Step 1: Check the Google Search Console

The first thing you want to do is log into your Google Search Console account. This is the account you (probably) set up when you started the site, to hook yourself up with Google Analytics and get a host of other benefits. Even if you don't use it regularly, adding yourself to the Search Console was a common piece of advice to improve page indexation, so a lot of people set it up and forgot about it.

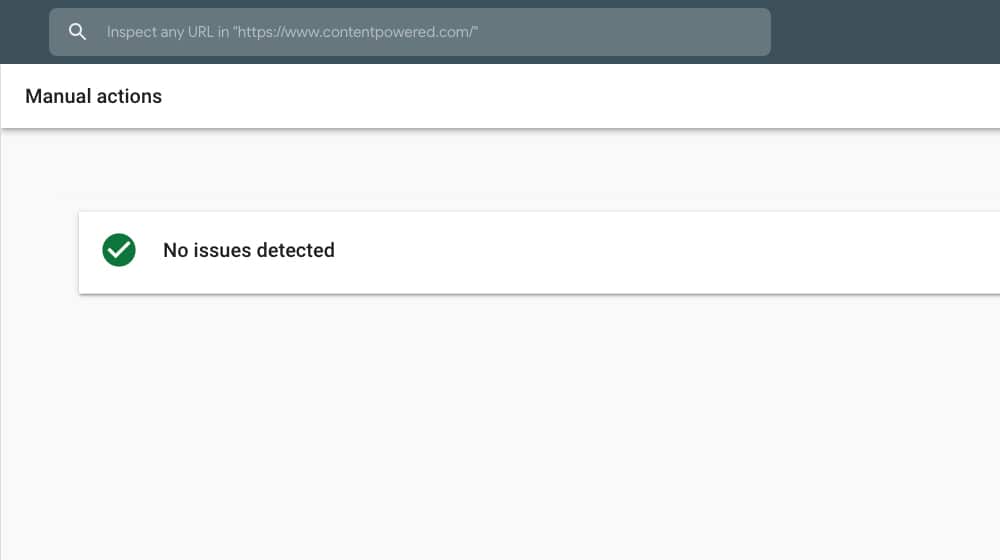

In the Search Console, the first thing to check is whether you have any penalties lurking on your domain. You want to find something called the "manual actions report", which will show you any manual penalties Google has placed against you.

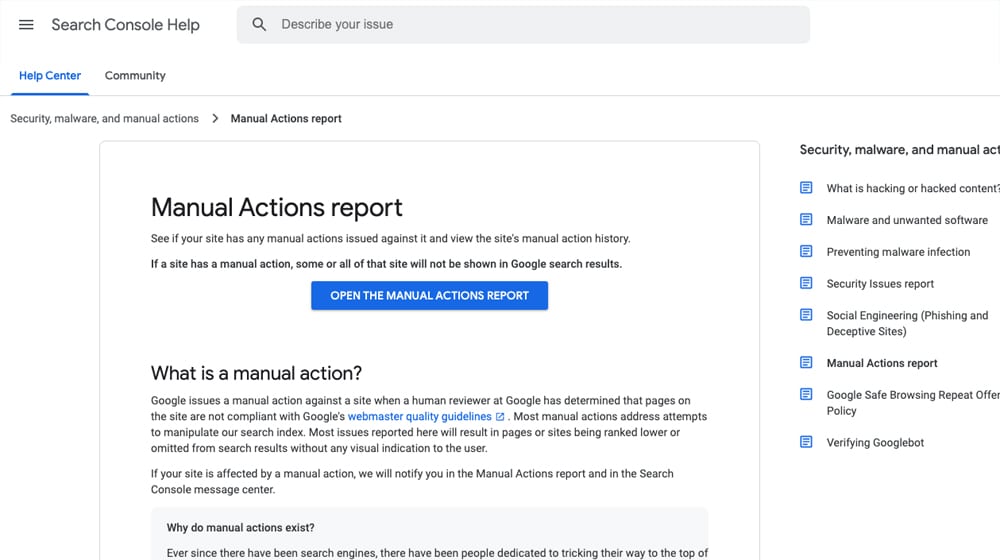

There are two types of Google "penalty" out there. Manual actions are one such type. Manual actions are cases where your site is doing something that's clearly against a Google policy, but which, if corrected, will not be held against you. So, for example, if there are a bunch of unnatural links on your site pointing to spam sites, that will cause a penalty, but if you remove those links, the penalty will be removed.

The other type of Google "penalty" is just the cost of having a poor site. If you're violating a tenet of the algorithm, like having a bunch of thin content pages, the algorithm will push down your search ranking. This isn't actually a penalty, but rather it's just how the algorithm ranks your site. If you fix the issue, your rank can improve, but it may take time to dig yourself out of the hole you've been in. People simply call these penalties because they can hit your site suddenly when Google updates their algorithm, similar to how a manual action can be sudden.

You won't see algorithmic penalties in the Search Console reports. You will, however, be able to see manual actions. What kind of manual actions exist?

- User-generated spam. This means you have a lot of user spam on your site, such as on a web forum or on your blog comments.

- Spammy free host. If you're using a free web host that throws their own links and ads on your site, that will penalize you.

- Structured data issue. Generally, this means you're trying to use structured data tags on the wrong kind of data or on pages that don't have much data at all, in an attempt to gain the benefits of using structured data in the first place.

- Unnatural links to your site. If you have a lot of bad, spammy links pointing at your site, you will need to audit and disavow them.

- Unnatural links from your site. Bad links from your site pointing at another might indicate your site looks spammy, or you may have been compromised and someone put them in your pages. You'll need to audit and remove the bad links.

- Thin content. This includes pages that are very short and have no real value to them. This includes things like auto-generated content, thin affiliate pages, and doorway pages.

- Cloaking. Any time you're trying to use scripts or redirects to hide something from Google, while still indexing and getting value from the page, it can trigger this penalty.

- Pure spam. Obviously, spam pages, stolen content, and other issues cause a penalty.

- Cloaked images. Showing Google one image but your users another is worth a penalty.

- Hidden text or keyword stuffing. Hiding text in a non-SEO-friendly way (like in hidden DIVs) can be damaging.

- AMP content mismatch. If you're using AMP pages, but the AMP version and the regular version of your page serve different content, it's a problem.

- Sneaky mobile redirects. Trying to send mobile users to a page that Googlebot can't follow is a penalty.

You can read more about all of these, as well as manual actions in general, on this page.

If you have any manual actions on your site, the manual actions report in the Google Search Console will tell you. It will also tell you which pages are causing them (or some of the pages, if hundreds are affected), and what steps you can take to fix the problem.

Clearing out manual actions is the first step to a good site audit, and should be done before anything else. In some cases, you'll be able to decide whether or not to just delete the pages and be done with it, but sometimes you want to salvage the pages. More on that later.

Step 2: Check the Number of Indexed Pages

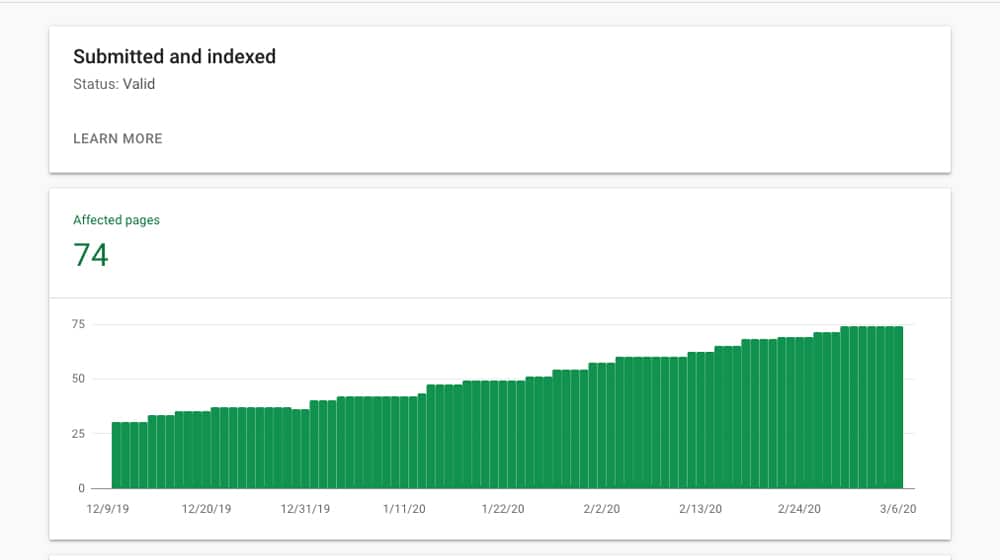

You should have a pretty good idea of the number of pages your site should have indexed. Your blog posts, category pages, homepage, landing pages, store pages, and so on. Even if you have a really old blog, you can estimate based on how old it is, how often you posted to it, and so on.
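If you want to put a number on that estimate, the arithmetic is simple. Here's a rough sketch; the function names and the sample figures (8 years, 2 posts a week, 4,200 indexed pages) are made up for illustration, so plug in your own.

```python
def estimate_expected_pages(years_active, posts_per_week, static_pages=0, category_pages=0):
    """Rough count of pages a blog should have indexed (hypothetical helper)."""
    blog_posts = round(years_active * 52 * posts_per_week)
    return blog_posts + static_pages + category_pages


def index_bloat_ratio(indexed_pages, expected_pages):
    """How many times larger the indexed count is than the estimate."""
    if expected_pages <= 0:
        raise ValueError("expected_pages must be positive")
    return indexed_pages / expected_pages


# Illustrative numbers only: an 8-year-old blog posting twice a week,
# plus 15 static pages and 10 category pages.
expected = estimate_expected_pages(years_active=8, posts_per_week=2,
                                   static_pages=15, category_pages=10)
print(expected)                                     # 857
print(round(index_bloat_ratio(4200, expected), 1))  # 4.9
```

A ratio close to 1 is about what you'd hope for. A ratio of several times your estimate suggests something is generating pages you didn't write.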

One semi-common error I've seen, and even experienced myself, is when a technical issue causes a ton of pages to be indexed that shouldn't be. Yoast SEO had a problem where system pages that should have been hidden were visible. I've seen issues with store systems that would generate extra unnecessary pages when an item sold out. Sometimes WordPress can be configured to create a new page with details for every image uploaded to the site. There are a lot of reasons why there might be extraneous pages floating around.

If you see far more pages indexed than there should be, you can start looking for the technical root cause. Often, it's as simple as changing a setting in your system somewhere and waiting for Google to pick it up. Other times, you'll need to manually add noindex tags to the pages that shouldn't be visible.
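Once you've added noindex tags, it helps to confirm they actually made it into the page source. Below is a minimal sketch using Python's standard library that checks a page's HTML for a robots meta tag containing noindex. The class and function names are my own, and it only looks at the meta tag, not at an X-Robots-Tag HTTP header, so treat it as a starting point.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html_text):
    """Return True if the page carries a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    return "noindex" in parser.directives


# Two hand-written sample pages, not real site output.
attachment_page = ('<html><head><meta name="robots" content="noindex, follow">'
                   '</head><body></body></html>')
blog_post = '<html><head><title>Post</title></head><body></body></html>'

print(has_noindex(attachment_page))  # True
print(has_noindex(blog_post))        # False
```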

Step 3: Perform a Content Audit

The next step is to start a content audit. In this case, we're mostly looking at blog posts, though you can audit product pages, landing pages, and system pages too if you want. In some cases, your product pages may be considered "thin" content, because there's no real content on them, or "copied" content, if you just copied product descriptions from a manufacturer.

If you were ever wondering why sites like Amazon have huge product descriptions, every possible tech spec, dozens of reviews and testimonials, and all the rest all on one page, that's why. It's to make the pages longer, more valuable, and less likely to be thin or copied.

So how do you do a content audit? It's actually pretty simple, though it's time-consuming.

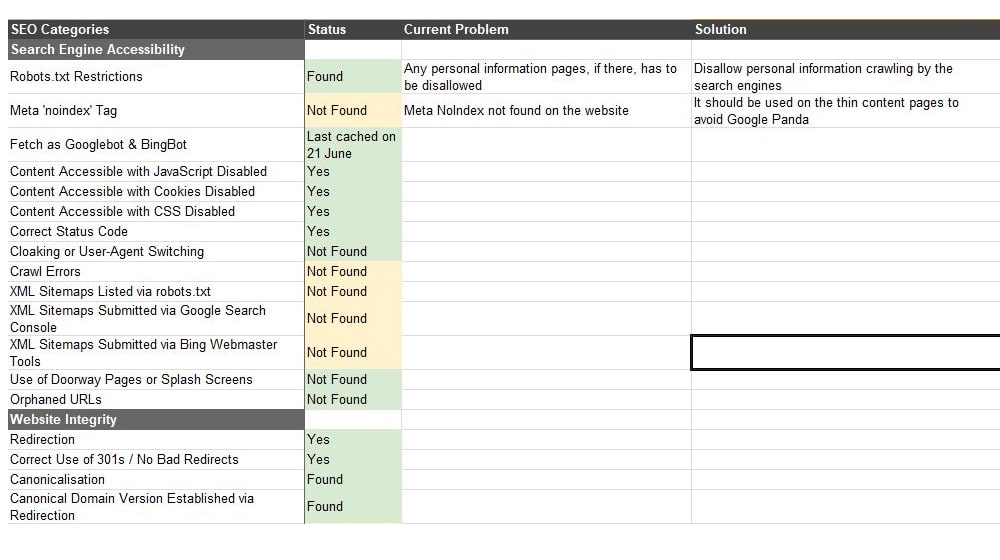

First, you want to scrape a list of all of the pages on your site. The easiest way to do this is with a site crawler. Something like Screaming Frog works very well here. Set it to download the page title, URL, and other relevant information about your blog posts and export it to a spreadsheet you can work with.
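If you'd rather work with the export in code than in a spreadsheet, something like the following sketch can load it. The column names ("Address", "Title 1", "Word Count") are assumptions about what your crawler exports, so check your own file's header row and adjust the parameters. The sample data is invented.

```python
import csv
import io


def load_crawl_export(csv_file, url_col="Address", title_col="Title 1",
                      words_col="Word Count"):
    """Read a crawler CSV export into a list of simple dicts.

    The default column names are assumptions; pass your own if they differ.
    """
    rows = []
    for record in csv.DictReader(csv_file):
        rows.append({
            "url": record[url_col],
            "title": record[title_col],
            "word_count": int(record[words_col] or 0),
        })
    return rows


# Invented sample standing in for a real export file.
SAMPLE_EXPORT = """Address,Title 1,Word Count
https://example.com/blog/seo-basics,SEO Basics,1850
https://example.com/blog/quick-update,Quick Update,120
"""

pages = load_crawl_export(io.StringIO(SAMPLE_EXPORT))

# Shortest pages first, since those are the likeliest "thin" candidates.
for page in sorted(pages, key=lambda p: p["word_count"]):
    print(page["word_count"], page["url"])
```

For a real export, you would open the file with `open(path, newline="", encoding="utf-8")` and pass that in place of the `io.StringIO` sample.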

Next, you want to rank your content. There are a bunch of different metrics you can use to rank each page, so pick the ones you care about the most. Here are some options:

- The search volume of the main keyword the article targets. You can get search volume from any number of keyword tracker tools out there, though you may have to manually specify what the keyword is for each post you want to check.

- The traffic the page has received over the last 1/3/6/12 months. Picking a time frame that works best for you is important here. You might have some highly valuable seasonal content that only gets traffic 2-3 months of the year, so a 12-month cycle can be good.

- Similarities to other existing articles. I've done this before: when you run a site for more than a few years, it's easy to write about the same topic 2-3 times and never realize it. If the posts aren't sufficiently distinct from one another, they may be cannibalizing each other's SEO value.

- Bounce rates. You can find bounce rates in your Google Analytics reports. Pages with unusually high bounce rates might be flagged for having some kind of issue.

- Average time on page. This is important when compared to bounce rates.

- Number of backlinks, and quality of backlinks. In a lot of cases, I'll hesitate to remove a post that has any backlinks at all, though it's possible that the backlinks pointing to a page are low quality or spam (like those Chinese aggregators, spam copiers, and other sites) and removing the page wouldn't hurt.

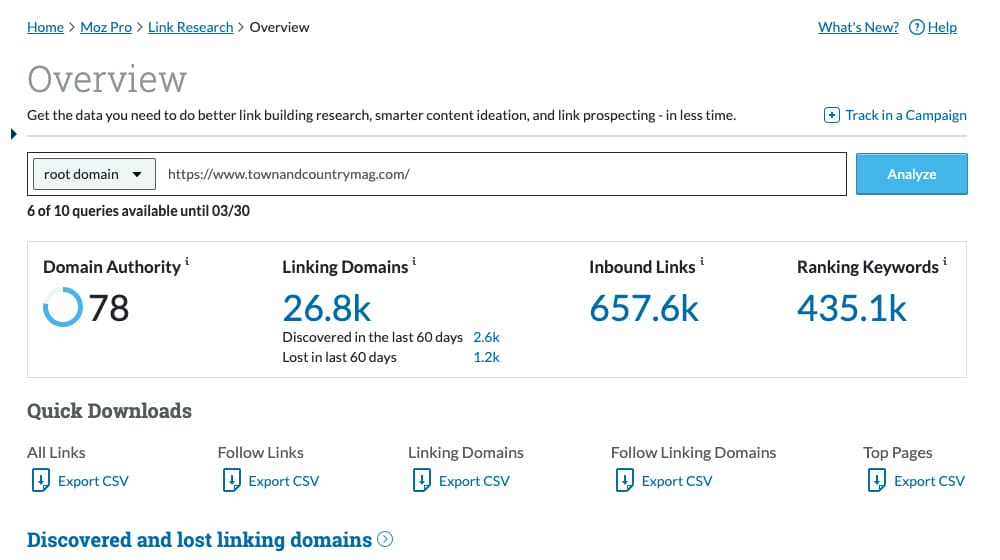

- Technical ranks. You can use systems like Moz's Link Explorer (the successor to Open Site Explorer, or OSE) to pull page-specific authority metrics for each page.

- Sniff test. This one can't be done with a tool; you just have to read the articles. Look for cases where the topic of the title isn't addressed in the content, where the content just feels bad to read, or where it's low quality and leaves you annoyed at having read it. Flag those pages.
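Of the metrics above, the "similar articles" check is one you can partly automate. Here's a minimal sketch that flags pairs of post titles sharing most of their words, using Jaccard similarity (shared words divided by total distinct words). The stopword list, the 0.5 threshold, and the sample titles are all arbitrary choices for illustration, and a title match is only a hint that you should read both posts, not proof of cannibalization.

```python
import re
from itertools import combinations

# A tiny, arbitrary stopword list; extend it for your own titles.
STOPWORDS = {"a", "an", "and", "for", "how", "in", "of", "the", "to", "your", "with"}


def title_tokens(title):
    """Lowercase the title and keep its meaningful words as a set."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return {word for word in words if word not in STOPWORDS}


def jaccard(first, second):
    """Shared items divided by total distinct items (0.0 when both are empty)."""
    if not first and not second:
        return 0.0
    return len(first & second) / len(first | second)


def find_overlapping_titles(titles, threshold=0.5):
    """Return (title, title, score) for every pair at or above the threshold."""
    pairs = []
    for first, second in combinations(titles, 2):
        score = jaccard(title_tokens(first), title_tokens(second))
        if score >= threshold:
            pairs.append((first, second, round(score, 2)))
    return pairs


titles = [
    "How to Build Backlinks for Your Blog",
    "Build Backlinks to Your Blog in 2015",
    "Choosing a Web Host",
]

for first, second, score in find_overlapping_titles(titles):
    print(score, "|", first, "|", second)
```

With these sample titles, only the two backlink posts are flagged, with a score of 0.75.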

I like to personally categorize content into three pools. The first pool is great content. The stuff that's evergreen, the stuff still getting traffic, the stuff with good backlinks, and so on. This is all content you want to keep around and use as a model or baseline for future content.

The second pool is mid-range content. Every site is going to have the bulk of its content in this category, and the exact boundaries between this and the other two categories are largely down to personal preference. It's the bulk of the bell curve and it's serving a purpose, but it's not GREAT content, merely decent content.

The third pool is questionable content. This is the stuff that fails the sniff test, that has zero traffic, no good backlinks, and is otherwise possibly holding you back. This is the pool we're mostly going to be working with for the rest of this article.
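If your audit spreadsheet has hundreds of rows, a small script can do a first pass at sorting pages into the three pools. The sketch below is one way to do it, not a formula Google publishes. The field names and the 1,000-visit threshold are placeholders, and as noted above, where you draw the lines is up to you.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    """The handful of audit columns this sketch looks at (names are my own)."""
    url: str
    visits_12mo: int
    good_backlinks: int
    passes_sniff_test: bool


def assign_pool(page, strong_traffic=1000):
    """First-pass pool for a page; review the results by hand afterward."""
    no_traffic_or_links = page.visits_12mo == 0 and page.good_backlinks == 0
    if not page.passes_sniff_test or no_traffic_or_links:
        return "questionable"
    if page.visits_12mo >= strong_traffic and page.good_backlinks > 0:
        return "great"
    return "mid-range"


# Invented sample rows.
audit = [
    PageMetrics("/ultimate-guide", visits_12mo=5400, good_backlinks=12, passes_sniff_test=True),
    PageMetrics("/news-roundup-2013", visits_12mo=40, good_backlinks=0, passes_sniff_test=True),
    PageMetrics("/old-filler-post", visits_12mo=0, good_backlinks=0, passes_sniff_test=True),
]

for page in audit:
    print(assign_pool(page), page.url)
```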

Step 4: Decide What to Do

At this point, you have a list of content that, according to Google's algorithm, is likely hurting your site. The content is thin, or low relevance, or isn't ranking, isn't getting you any traffic, has no worthwhile backlinks and is in general just an anchor around your neck.

So what do you do?

You have to go through the posts one by one and decide what to do with them. With that in mind, I've put together a series of questions to help you decide.

Does the content have any data, links, or comments you want to save? Old engagement is still engagement, and old links are still links. If the content has some lingering value, such that it's at the top end of the third pool but is still just short and thin enough that it's not great, you can do something about it.

For this kind of content, I generally recommend that you go through a rewrite process. I actually wrote a whole post about doing exactly that, which you can read here.

Do you have multiple pieces of content on more or less the same subject? Rather than deleting these, you can redirect and merge them. Even if the individual pieces of content aren't ranking well and aren't pulling in traffic, a newer resource with the data and accumulated coverage of the old posts, plus updated data, can be a great new post. Merge and update the content into something new that meets modern content standards. Then redirect the old posts to the new one.

Does the content fail to have any redeeming qualities? A lot of content has something going for it. Maybe it has a couple of good (if old) backlinks. Maybe it had a lot of good engagement back in the day. Maybe it covers a really interesting topic that's relevant to your brand, even though it's old coverage so it gets no traffic.

Some content lacks any of that. This is most often true of content published before 2011, but any content published can fall into this category, even recent content. If it's just poor quality, if it doesn't meet modern standards, if you hired a freelancer and they copied content from another source, or whatever, the content simply isn't worth keeping.

In these cases, you want to remove the content, but you want to make sure Google knows you removed it. Returning an HTTP 410 Gone status code for the old URL should do nicely.
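The three questions above boil down to a small decision helper, sketched here. It asks them in the same order as this section, which is a simplification: a post that both has value and overlaps other posts still deserves a judgment call. Using a 301 (permanent) redirect for merged posts is my assumption; the only status code named above is 410.

```python
from enum import Enum
from http import HTTPStatus


class Action(Enum):
    REWRITE = "rewrite"
    MERGE = "merge"
    REMOVE = "remove"


# What the OLD URL should answer with after each action.
# 301 for merged posts is an assumption; 410 for removals comes from the text above.
STATUS_FOR_OLD_URL = {
    Action.REWRITE: HTTPStatus.OK,
    Action.MERGE: HTTPStatus.MOVED_PERMANENTLY,
    Action.REMOVE: HTTPStatus.GONE,
}


def decide_action(has_value_to_save, overlaps_other_posts):
    """Apply the three questions in order to one post from the third pool."""
    if has_value_to_save:
        return Action.REWRITE
    if overlaps_other_posts:
        return Action.MERGE
    return Action.REMOVE


# Invented examples: (has data/links/comments worth saving, overlaps other posts)
for answers in [(True, False), (False, True), (False, False)]:
    action = decide_action(*answers)
    print(action.value, int(STATUS_FOR_OLD_URL[action]))
```

This prints "rewrite 200", "merge 301", and "remove 410" for the three sample cases.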

Once you've performed your content audit and removed the worst content, you need to get to work improving older content, merging content as necessary, and producing new content to fill new roles. Ideally, you'll be able to watch your site rankings improving by the day.

August 26, 2020

This is very educational. I am wondering what to do with my old content since I really don't want to remove it, even though it could be a bit better quality-wise. A few of the posts have some good links and shares, so I might take your advice and redirect/merge them.

However, my question is, if I do that, would the social engagement and comments from 2 different posts still remain?

August 30, 2020

Hey Nancy! Well, to merge two posts, you have two options: Option #1 is to redirect both of them to a third / brand new post, and Option #2 to choose one of those two posts to rewrite and redirect the other to it.

I would pick whichever post has the most engagement as the post to keep, and redirect the other posts to it. You won't keep the comments on the other posts that you're redirecting, since they'll no longer exist. You might be able to pull some magic in the WordPress database if you can locate the comments and change their post ID value in phpMyAdmin. If you have some patience and time, you could probably figure it out. Back up your database beforehand just in case you accidentally make a critical error.

As far as social shares, those are tied to individual URLs, so you're going to lose those on the post(s) that you decide to redirect. There's no way around it.

It's still very worthwhile to find posts that used to perform well in the past and completely rewrite them, or to find several posts that are underperforming and merge them into a larger updated piece (especially if they have engagement, links, and shares).

Good luck!

September 10, 2020

Hi James, I am already on step 3. When deleting posts, does it make a difference if I bulk delete them, or is it better to do it one at a time?

September 12, 2020

Hey Guzman - I would definitely tackle them one at a time since there are so many metrics you'll absolutely want to check before you ever delete any posts.

Total traffic in the past few months, total backlinks reported on a reputable scraper like Ahrefs Pro, whether or not it has blog comments and social shares, etc. Then you have to make notes on whether or not to redirect it to a similar post, merge that content with another similar post that is flagged as low quality to try to create a larger higher-quality article, or delete it entirely. Doing this in bulk isn't very feasible and you could very easily make mistakes, so do them one at a time and take your time with it. Good luck!

February 06, 2022

I haven't thought of this before, but this seems like a good idea. Our site is definitely due for auditing. Thanks for sharing.

February 10, 2022

Hey Simon! My pleasure, I'm glad this was useful to you.

March 03, 2024

My website also has a lot of poor content. I will delete it one by one. Thanks

March 07, 2024

Hey Abrar, happy to help! Good luck with your content!