Is Keyword Density Dead or Is It Still Relevant for SEO?

If you've followed this blog for a while, you've probably seen me mention that keyword density is something I don't care about. Keyword density and, to a certain extent, specific keyword usage are dead or near-dead.

What is Keyword Density?

In case you don't know all about it, keyword density is the frequency at which your keyword appears in your text. It's tricky to calculate because no one ever came up with a specific proven metric. Was it per thousand words? Per hundred? Ask ten SEOs and you'll get ten different answers.

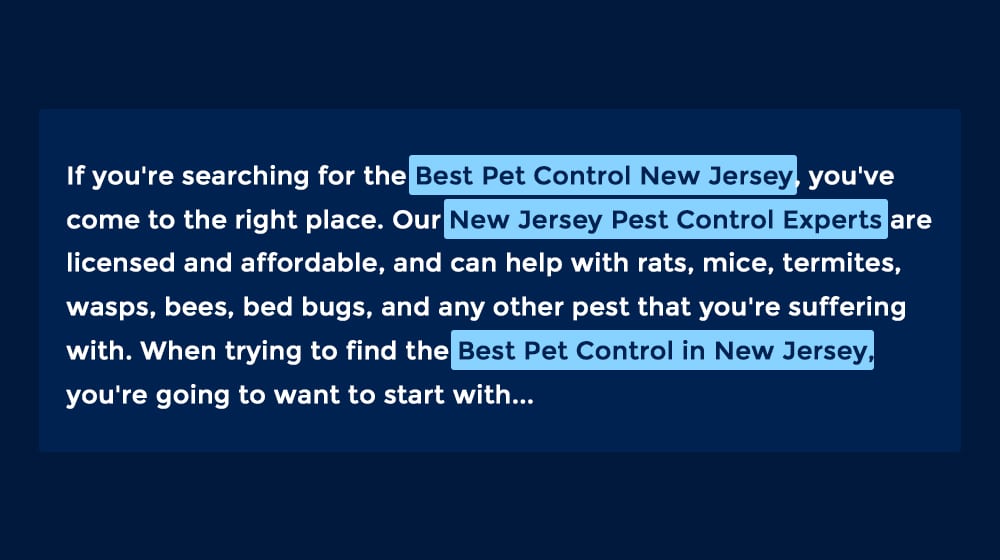

I did some work for a few content mills for a while, and many of them had keyword requirement fields (and still do to this day). It was very common to see a 1,000-word article with three different five-word long-tail keywords, each of which needed to be used five times minimum. Imagine writing a blog post 1,000 words long, and having to include:

- Best Pest Control New Jersey

- New Jersey Pest Control Experts

- Top Pest Control Strategies NJ

That means including each of these keywords five times in every article. Three five-word phrases used five times each comes to 75 of your 1,000 words dedicated to the same phrases over and over, and that's a good example! Those are some semi-reasonable local long-tail keywords, and 1,000 words is a decent amount of leeway. I've seen projects where the client wanted that many keyword mentions in a 500-word post. They wanted 15% of the post to be keywords!

Pop quiz: which of those would have done better, the 500-word post or the 1,000-word post?

Answer: the 1,000-word post, but only because it's longer. Chances are pretty good that both of them would barely rank at all, especially today. Pre-Panda, the world was a very different place, but even then, this kind of content wasn't that great.

Now, the general formula for keyword density is the number of times your keyword appears divided by your total word count, multiplied by 100 to get a percentage. So, as an example, a keyword used five times in 1,000 words would have a 0.5% keyword density. Most people back then seemed to shoot for something like a 2-5% keyword density, which meant mentioning a long-tail keyword in nearly every paragraph.
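To make the arithmetic concrete, here's a minimal Python sketch of that formula. The function name `keyword_density` and the simple tokenizer are my own assumptions for illustration; there was never one agreed-upon way to count, so treat this as one possible convention and not a standard.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Occurrences of `phrase` divided by total words in `text`, times 100."""
    # Assumption: a "word" is any run of letters, digits, or apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window over the text and count exact matches of the phrase.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100 * hits / len(words)


# A made-up 1,000-word text: a five-word phrase used 5 times plus 975 filler words.
phrase = "new jersey pest control experts"
sample = " ".join([phrase] * 5 + ["filler"] * 975)

print(keyword_density(sample, phrase))  # 0.5
```

If you counted the words inside the phrase instead of the occurrences (25 of 1,000 here), the same text would come out at 2.5%, which shows how much the answer depends on the convention you pick.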

How useful do you think that content was? Not very. There's a reason you don't see content like that anymore. Users hate it, and Google hates what users hate, so Google decided that "too high" keyword density is a signal of poor quality content.

At least, that's the theory. The fact is, Google probably never cared about keyword density in the first place. Density, after all, doesn't take into consideration the distribution of the keyword throughout the content, or the relevance of the keyword to the rest of the content. If density were all that mattered, you could write your keyword enough times to achieve that density in the first (or last) paragraph and still rank fine. Google has always been more sophisticated than that. They consider synonyms and know enough about these topics to determine if you're covering the right points, or if you're writing a bunch of nonsense, and they do all of this within milliseconds.

Why Keyword Density is Dead

In my opinion, keyword density died long before RankBrain and neural networks and all of these modern algorithms came into play. It died back in 2011, around when Panda came out. It's all part of that ever-touted, consistently nebulous concept of "quality." Even then, Google probably didn't care specifically about density, but they did pay attention to the frequency of various keywords throughout content, and how many other keywords in that content were related to it.

When you read a piece of content, you build awareness of it. You read the title, the subtitle, and the intro paragraph, and you know roughly what it's about. Everything else is in the content itself: the points it makes, how rationally it explains them, and whether it stays on topic. It's history, it's data, it's reasoning. It's how you take a position, how you defend that position, and how you convince others of your stance, all while supporting your content with links and sources.

You don't need a keyword every 150 words to remind you what the content is about. Some people have bad short-term memory, but not that bad.

And, neither does Google.

By parsing your text and drawing the relationship between words, phrases, sentences, and paragraphs, Google can determine what the topic of a piece of content is.

But, even before they could do that, they could still associate word groups. If you write a blog post about pest control, they can identify that pest control is the primary keyword because everything else you write uses words related to it. It talks about specific pests, and control measures, and the pros and cons of them, and where you can find them. It all centers around that one core concept, and Google's algorithm is very good at identifying that concept.

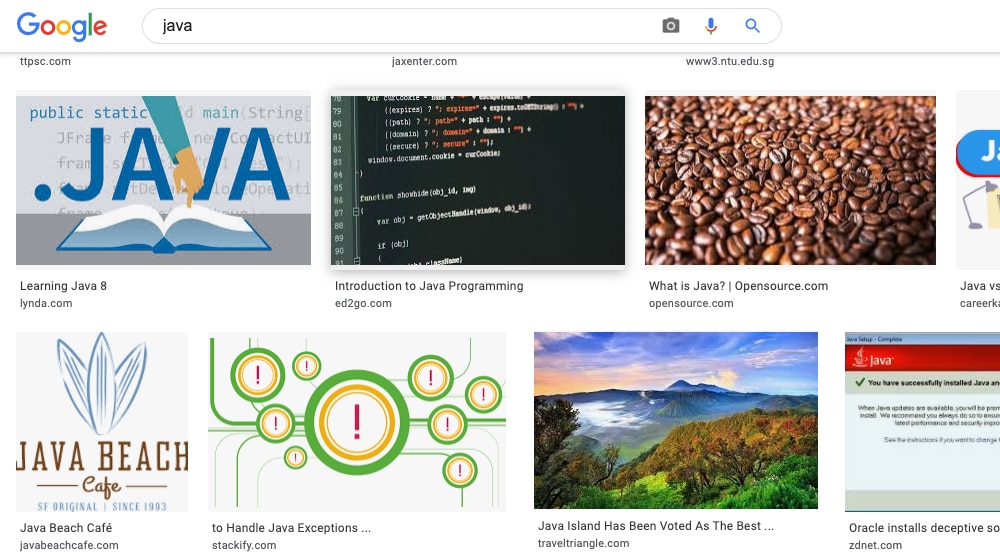

Take the word "Java" for example. If you write a blog post with Java as the keyword, what is it about? Is it about the programming language? Is it about the island? Is it about coffee? Someone just searching for Java could be searching for any of the three, but a single piece of content won't be about more than one of them.

Google can identify it, though, because they can associate the other words you use in your content with one meaning or another. If you talk about SDKs and developers, you're writing about programming. If you're talking about Indonesia, you're talking about the island. If you're writing about cups and beans, it's almost certainly going to be about coffee. Google can make that association. This is just a basic illustration; their actual algorithm is obviously a lot more complex than that.
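Here's a toy Python sketch of that kind of word association. To be clear, this is not how Google does it; the `SENSES` vocabularies and the `guess_sense` function are hypothetical, hand-picked for the Java example, and a real system learns these relationships from enormous amounts of text.

```python
import re
from typing import Optional

# Hypothetical, hand-picked context words for each meaning of "Java".
SENSES = {
    "programming": {"sdk", "sdks", "developer", "developers", "compiler", "code"},
    "island": {"indonesia", "jakarta", "island", "volcano", "travel"},
    "coffee": {"cup", "cups", "bean", "beans", "roast", "espresso"},
}


def guess_sense(text: str) -> Optional[str]:
    """Pick the meaning whose context words overlap most with the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {sense: len(words & vocab) for sense, vocab in SENSES.items()}
    best = max(scores, key=scores.get)
    # With no overlapping context words at all, refuse to guess.
    return best if scores[best] > 0 else None


print(guess_sense("Java developers can download the SDKs today"))  # programming
print(guess_sense("I made a cup of Java from fresh beans"))        # coffee
```

Notice that the word "Java" itself contributes nothing to the score. It's the surrounding words that settle the meaning, which is why repeating the keyword more often wouldn't help.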

Using your keyword a couple of times was enough to associate the content with the keyword – back when that still mattered – but anything more was excessive. It annoyed users, it wasn't necessary for the search engine, and it made writing awkward and difficult.

I believe Google killed off caring about keyword density around the same time they started to care about quality. Panda muddied the waters a lot because it changed the way content was written on the web. The removal of keyword density was a part of that.

The Proof in the Pudding

I've occasionally met a marketer who still believes in the old ways, as if attaining some specific keyword density will work like a magic spell and trick the algorithm into ranking their (usually mediocre) content better than the competition.

To them, I say, read the room.

Google's John Mueller, in 2014:

"Keyword density, in general, is something I wouldn't focus on. Make sure your content is written in a natural way. Humans, when they view your website, they're not going to count the number of occurrences of each individual word. And search engines have kind of moved on from there over the years as well."

"That's just not the way it works. So if you think that you can just say, okay, I'm gonna have 14.5% keyword density, or 7%, or 77%, and that will mean I'll rank number one, that's really not the case. That's not the way that search engines work. […] And then what you'll find is that if you repeat stuff over and over again, then you are in danger of getting into keyword stuffing."

"If search engines derived document relevancy based heavily on keyword density, then all you would have to do is repeat your target term over and over to get pages to rank. Search engines are not that dumb."

If two executives from Google are telling you that keyword density isn't relevant and doesn't work, it's something you should take seriously. Chasing density may have worked over a decade ago, when search quality was nowhere near where it is today, but unfortunately a lot of those old techniques are still floating around.

The Change That Killed Keywords

I'm going to say something here that might shock some of you, especially the marketers who have been around for a decade or more.

I think keyword research is (nearly) dead.

Now, hold on. Let me explain what I mean.

Keyword research in the past was all about finding specific variations of a long-tail search phrase that struck the right balance between search volume and competition. Too high a search volume and the competition was likely too fierce to ever rank for it. Too low and it wasn't worth ranking for. Trying to find a keyword with just the right variation of just the right phrase was the constant quest all marketers were pursuing.

Then Google changed.

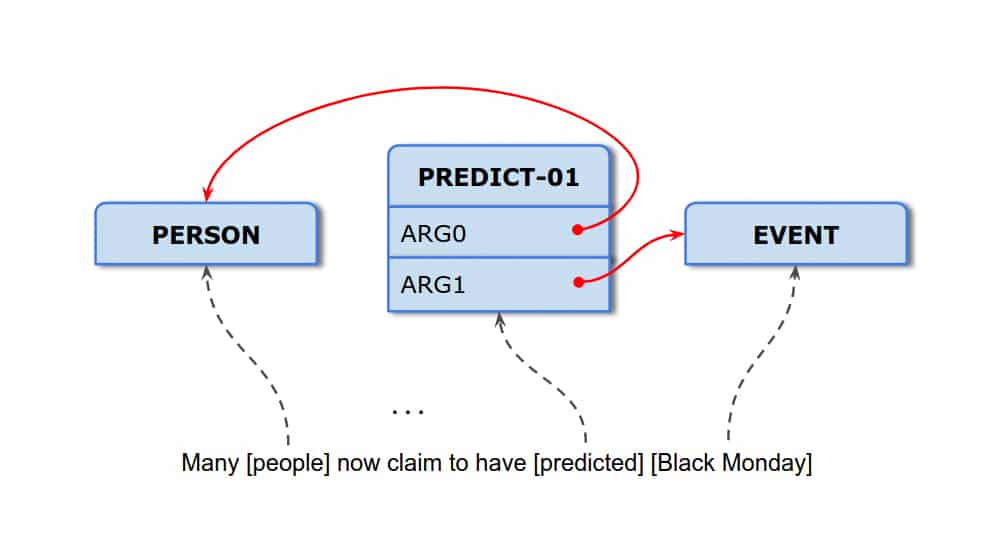

Google introduced something called Natural Language Understanding. NLU is something that has been an ongoing project for software engineers and AI developers for a long time. It's getting a computer to understand the nuances and semantic differences in language.

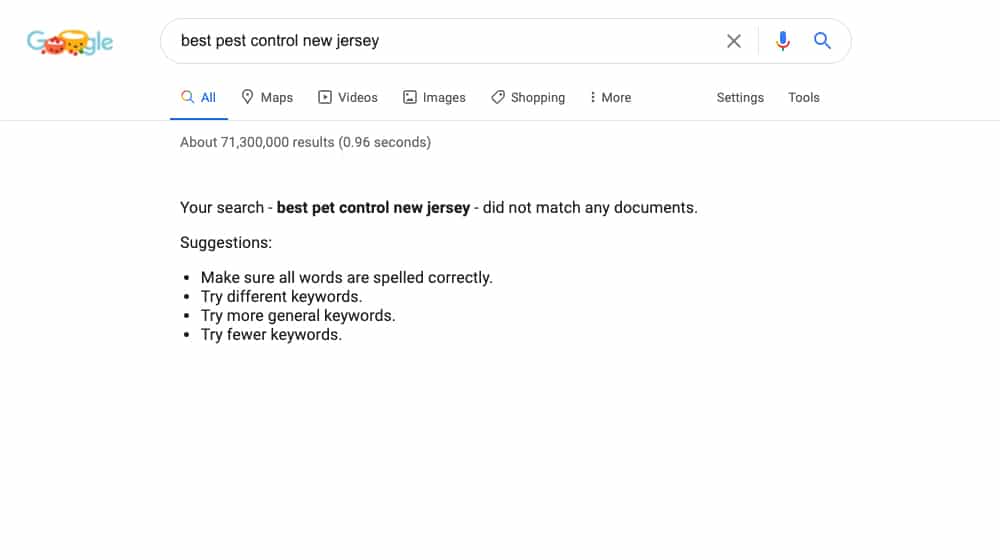

What's the difference between content focused on "pest control in new jersey" and content focused on "new jersey pest control"? Nothing. Understanding that the intent and the content are the same, even though the two keywords and search phrases are different, is surprisingly difficult for a computer.

Google has been throwing a lot of money and a lot of computing power at this problem, and every year they iterate on their algorithm so it understands language better. For example, in the past, a natural language parser might have interpreted "work from home" and "homework" as the same concept when we clearly know they're different. Google's algorithm can tell the difference today.

Back in 2017, Google introduced SLING, a language parser using a neural network to interpret and learn the language. It's all incredibly cool, and it's also technical enough that it's a bit difficult for most people to understand. Feel free to dig a bit deeper on this if you're interested in learning more.

The result for SEO is pretty stark. If I were to write two blog posts, one targeting "pest control in new jersey" and one targeting "new jersey pest control", those two posts would cannibalize each other. They would show up for the same queries and spread search value and clicks between them. They are, functionally, the exact same keyword.
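If you want to spot these overlaps in your own keyword list, a rough Python sketch like the one below can help. It treats two keywords as the same topic when they contain the same words once filler words are dropped and word order is ignored. The `STOPWORDS` set and both function names are my own assumptions, and this is far cruder than real language understanding (ignoring word order also merges phrases like "dog bites man" and "man bites dog"), so treat the output as a starting point for review.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List

# Assumption: a tiny hand-picked list of filler words to ignore.
STOPWORDS = {"a", "the", "in", "for", "of", "to", "near"}


def topic_key(keyword: str) -> FrozenSet[str]:
    """Reduce a keyword to its set of meaningful words, ignoring order."""
    return frozenset(w for w in keyword.lower().split() if w not in STOPWORDS)


def group_by_topic(keywords: List[str]) -> List[List[str]]:
    """Group keywords that reduce to the same set of words."""
    groups: Dict[FrozenSet[str], List[str]] = defaultdict(list)
    for kw in keywords:
        groups[topic_key(kw)].append(kw)
    return list(groups.values())


keywords = [
    "pest control in new jersey",
    "new jersey pest control",
    "termite control",
    "rodent control",
]
for group in group_by_topic(keywords):
    print(group)
```

Any group with more than one entry is a candidate for a single post instead of several competing ones.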

What about all of the detailed analysis of long-tail variations of keywords to achieve just the right amount of search volume? It's meaningless now because Google can and will present results that don't use the exact match keyword at all. You've probably seen that yourself, though you may not have recognized it at the time.

Much like how specific meta descriptions are on their way out, I think specific keyword research is on its way out as well.

I'm not the only one who feels this way, either. Neil Patel published a post by Alp Mimaroglu making the same point. Keywords are less and less important with every passing year.

Long Live Keyword Research

Now, I phrased things the way I did for the shock value. I don't actually think keywords or keyword research are dead. I think caring about specific keywords is dead, and keyword density with it.

I still use keyword research and research tools, and so should you. The point, however, has changed. It's no longer worth the time to come up with specific long-tail keyphrases with minor variations between them. No, instead, what you're researching is topics.

Choosing a topic involves keyword research, so you can determine things like search volume and competition. Keyword research tools that spin out keyword variations are still helpful because they can find adjacent and related topics for you to explore in other posts. Rather than writing a post about new jersey pest control and another about pest control in new jersey, you might write one about rodent control and one about termite control. Those are similar topics, not the same topic phrased in different ways.

There's one caveat to all of this that is worth mentioning: specific keyword research is relevant for paid ads. Google Ads still cares about keywords, including match type and keyword list variations, so that's important research to do. You also have to match your site's overall topic to the kinds of keywords you're advertising; otherwise, you'll likely end up with a lower relevance score and worse ads. For organic SEO, though? Well.

What to Do with Keywords in Modern SEO

If keyword density has been dead for so long that the body has mummified, and if keywords are more or less dying, what do you do for SEO?

Modern SEO still cares about keywords, and I don't see that truly changing any time soon. You don't need to care about specific long-tail keyphrases, but you do need to care about the core top-level keywords or short-tail keywords that inform the core of your topic. With backlinks, for example, search engines still consider the anchor text and the context in which the link was used to understand what the linked page is about. This influences rankings (when it's not being exploited).

Use those keywords in your title, meta title, meta description, and first paragraph of your content. You can try to fit them in when natural throughout the post, but don't worry about it too much. For example, the post you're reading right now is about keyword density, but I spent large portions of it without even using the phrase. Is that hurting me? Absolutely not. I didn't even think about it, and it would be pretty difficult to write an organic post on the subject without mentioning those words.

Perform some keyword research, but don't bother keeping extensive spreadsheets of every long-tail variation you can find with metrics associated with each. These don't matter much. Instead, prune those lists down to individual topics. Use those topics to guide specific posts, with the focus of the post determined by the topic. You may even be able to fit several of those similar keywords into a single larger guide or resource and break it down into smaller pieces.

From there, simply write your content. Try not to go too wildly off the rails, because if you do, your topic is no longer accurate and you won't rank for it. It's fine if that happens – just change the keyword – but it's better to remain focused on the topic at hand.

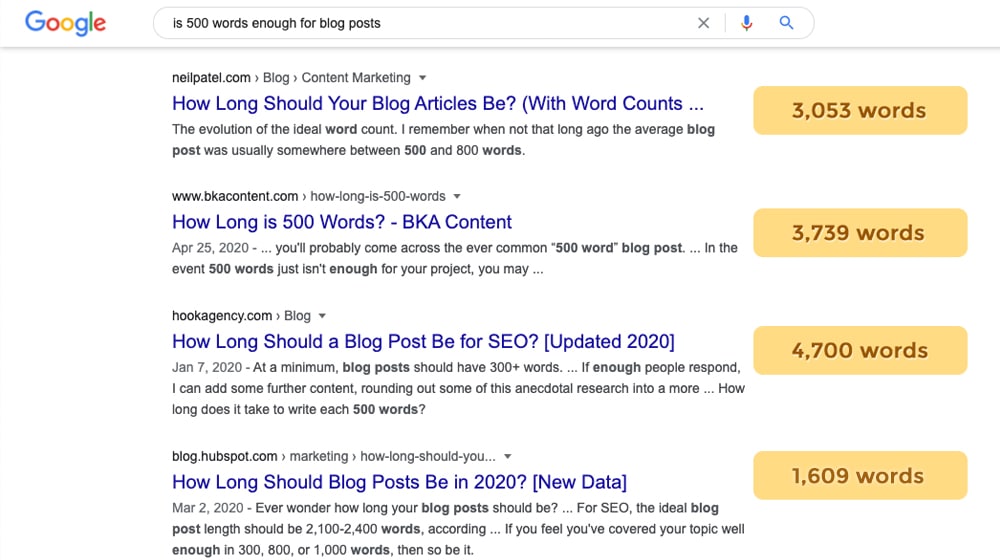

Make sure your content is high quality. I like 2,000+ words in length, as I find it gives me the most room to delve into a topic without running into a wall or having to fluff it up.

And as for keyword density? It still has one use: any SEO who tells you it matters is one you absolutely shouldn't trust.
