Does Undetectable.ai Work? Breakdown, Detection Results and More

Love it or hate it, it's undeniable that LLMs are having a massive impact on the world. Whether it's their ill-advised use in medical situations, their pop-culture popularity as makeshift therapists, or their role as tools for outputting content, they're increasingly everywhere.

There's a huge and sprawling discussion to be had around these so-called "AI" tools. I have a lot of opinions, but this isn't the place for most of them.

So, instead, I'm going to narrow down my focus to how they impact my industry of content marketing.

A Brief Overview of LLMs

LLMs, or Large Language Models, are vastly complex black-box statistical models that analyze language. Given a sufficiently large pile of training data (in this case, the entire internet plus as many offline journals, books, and media sources as they can possibly hoover up), the LLM can predict how words are arranged.

Using a sort of call-and-response system, it can detect the arrangement of words used in a prompt and output a series of words that is statistically most likely to match the intended output.

One of the big things you should be aware of is that nowhere in there did I mention thinking, cognition, facts, analysis, or any other form of thought.
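To make the "statistical prediction" idea concrete, here's a deliberately tiny sketch. It is not how a real LLM works internally (those use neural networks trained on vastly more data); it's a toy bigram counter, and the corpus and function names are made up for illustration. The point is only that "pick the most likely next word" requires no understanding at all.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def most_likely_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Toy "training data"; a real model sees trillions of words.
corpus = "the cat sat on the mat and the cat ran"
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))  # "cat" follows "the" twice, "mat" only once
```

Nothing in that code knows what a cat is. It only knows which word tends to come next.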

Image source: https://s3.mordorintelligence.com/large-language-model-llm-market/large-language-model-llm-market-1736421901422-Presentation1.webp

Humans are adept at seeing patterns. We're also adept at projecting some element of ourselves onto what we see. The technical word for seeing faces and patterns where there are none is pareidolia. Just because you can see a face in the cross-section of a pepper doesn't mean that the pepper is a person.

AI output is the same. It looks very much like human language because it's a tool explicitly built to mimic human language. It doesn't think. It doesn't have any concept of what a fact is. It has guardrails that are as much based on blocked words as anything.

It can work as a therapist because there are trillions of words worth of therapy-related content online; that doesn't mean it can empathize.

A lot of people recognize that LLMs aren't people and don't have a concept of truth. This is where hallucinations crop up. Hallucinations are the name for when an LLM outputs a series of words that, while statistically likely to be appropriate, nevertheless are not accurate to reality. There are many extant examples, such as:

- Google's Bard claiming, in its introduction, that the James Webb telescope captured the first images of an extrasolar planet (it didn't; the first image was captured in 2004, 17 years before the JWST was launched).

- The attorney who used ChatGPT to ask for legal precedent to support a case filing, and ChatGPT happily providing case names and citations… which didn't exist.

This is, quite possibly, an insurmountable challenge because of the very core of what LLMs are. In order to have a concept of fact, an AI would need to be trained from the ground up with facts and analysis rather than statistics and words. It would be an entirely different system.

The AI Arms Race

All of this has opened up a battlefield.

Because people recognize that LLMs are not beholden to factual accuracy, they often want to know when content is AI-generated. In the early days of AI, it was really obvious, but it's getting harder and harder to tell at a glance. There are, however, telltale signs: the statistical "noise pattern" that exists because of the math-based generation algorithms.

People have tried to distill this down. "If they use too many em-dashes, too many commas, if they overuse specific words, it's probably AI."

This doesn't work because all of those habits came from people who wrote the content that trained the AI. AI content can be detected, but it takes a much more thorough and deeper analysis of language patterns and implicit noise than a human can do at a glance.

Enter the AI detector. Tools like GPTZero, Turnitin, Originality, my own bespoke detector, and many more have all sprung up with varying degrees of efficacy.

The war here is not, actually, between the LLMs and the AI detectors, though. It's more between the AI detectors and the humanizers. There are a variety of tools you can plug LLM-generated content into to have it "humanized," which is to say, stripped of the characteristics of LLM generation.

In some cases, like Undetectable.ai, one tool serves both purposes. They're the bats in the fable of the birds and the beasts, playing both sides.

LLMs improve. AI detectors improve. AI humanizers improve. Meanwhile, those of us who just want to read and trust the content we find online have a harder and harder time.

Today, I wanted to take a closer look at Undetectable.ai specifically, but also AI humanizers in general. Let's talk about what they do and why it matters.

Why should you trust what I have to say about this subject?

Well, I've been a content marketer for over 15 years now. I've seen major sea changes in content marketing, from Google Panda to LLMs and more. I'm not an AI researcher, but I've experimented with all of the major LLMs and all of the major AI humanizers. I built an AI detector that, at least in my testing, has proven to be very reliable. I've also just done a lot of thinking and a lot of talking to experts on both sides of the coin to get where I am today.

What do AI Humanizers Do?

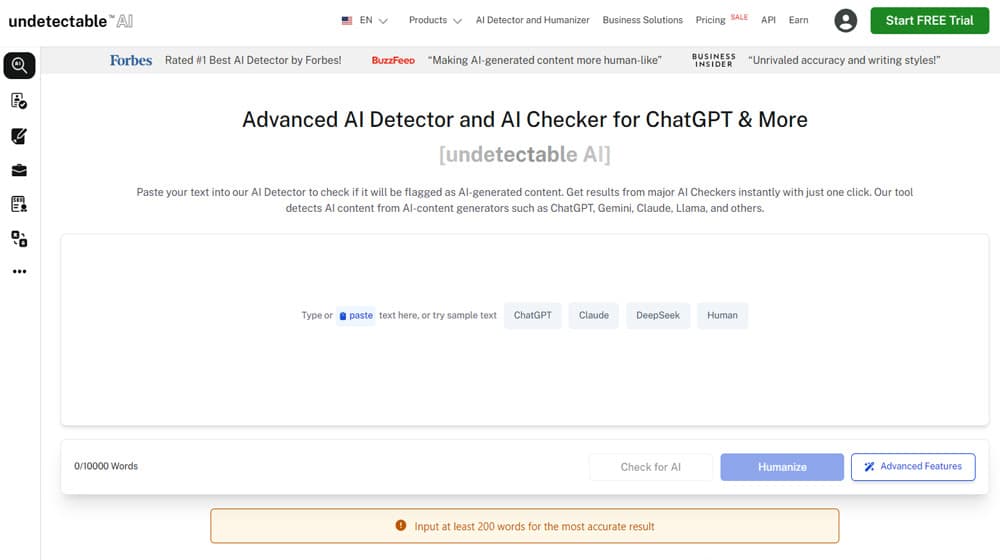

Before I get into the specific details, I'm going to do a bit of a disclaimer here. I'm using Undetectable.ai as my example because it's one of the most popular and prominent AI detector and AI humanizer apps out there. I'm not specifically trying to attack them or anything; I'm just using them as an example to show you what's going on behind the curtain.

AI humanizers take an input text, look for elements of "AI-isms," and remove them. In the process, they effectively rewrite the text, ostensibly in a way that makes it appear more human to the tools that detect AI output.

A Test Example

For this post, I ran a test. I generated a short snippet of text using GPT 4.5 and ran that text through Undetectable.ai to see what it changes.

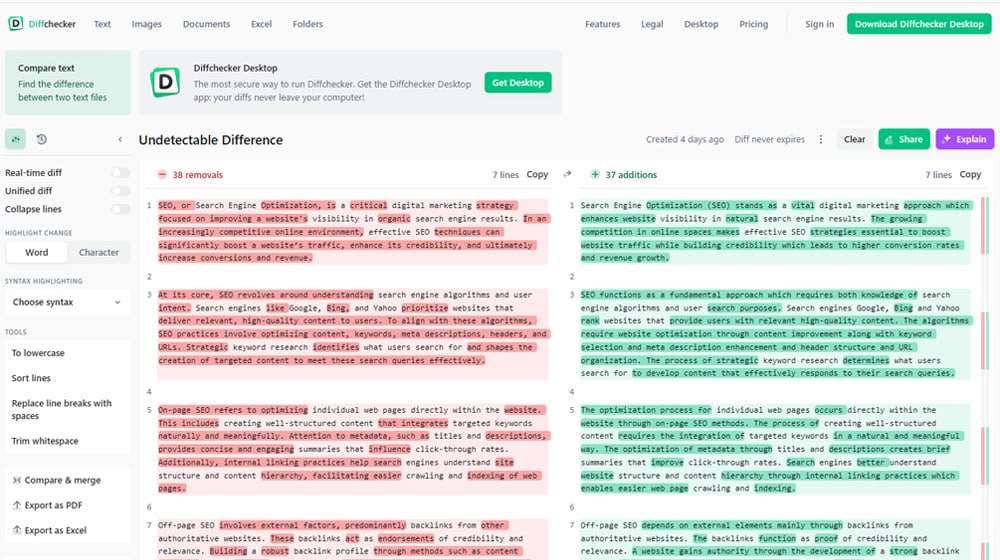

Here's a difference checker analysis.

You can use these to follow along.
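If you'd rather run this kind of comparison yourself, Python's standard `difflib` module can do a rough version. This is a minimal sketch, not the tool I used; the helper names are my own, and the sample pair is one sentence from the GPT passage and its humanized counterpart, both quoted in full further down.

```python
import difflib


def word_similarity(original, rewritten):
    """Return a 0..1 similarity ratio between two texts, compared word by word."""
    return difflib.SequenceMatcher(None, original.split(), rewritten.split()).ratio()


def word_diff(original, rewritten):
    """Yield only the words that were removed ("- word") or added ("+ word")."""
    for token in difflib.ndiff(original.split(), rewritten.split()):
        if token.startswith(("- ", "+ ")):
            yield token


before = "These backlinks act as endorsements of credibility and relevance."
after = "The backlinks function as proof of credibility and relevance."

print(f"similarity: {word_similarity(before, after):.2f}")
print(list(word_diff(before, after)))
```

A ratio of 1.0 means the two texts are word-for-word identical; the lower it goes, the more the tool rewrote.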

Analyzing the GPT Text

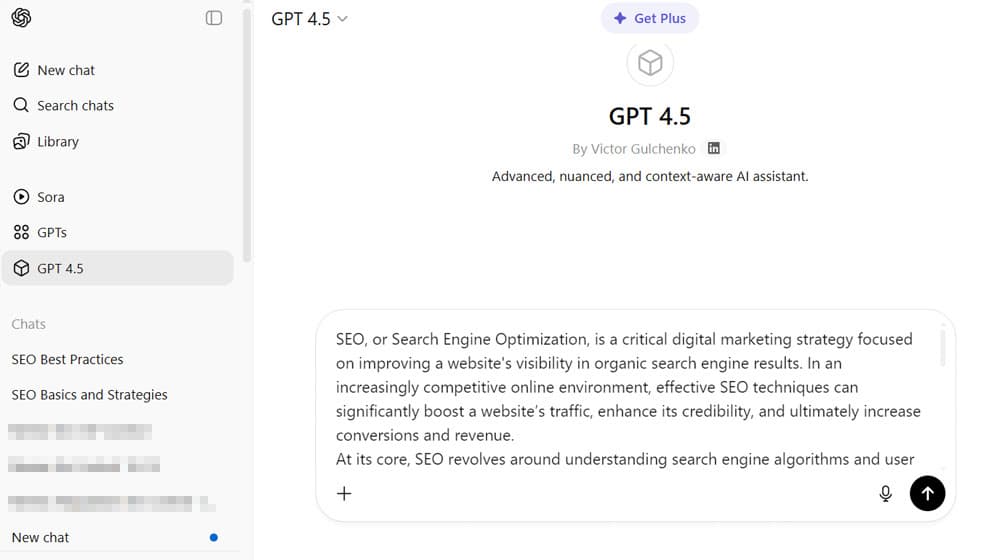

Here's the text generated with GPT 4.5.

"SEO, or Search Engine Optimization, is a critical digital marketing strategy focused on improving a website's visibility in organic search engine results. In an increasingly competitive online environment, effective SEO techniques can significantly boost a website's traffic, enhance its credibility, and ultimately increase conversions and revenue.

At its core, SEO revolves around understanding search engine algorithms and user intent. Search engines like Google, Bing, and Yahoo prioritize websites that deliver relevant, high-quality content to users. To align with these algorithms, SEO practices involve optimizing content, keywords, meta descriptions, headers, and URLs. Strategic keyword research identifies what users search for and shapes the creation of targeted content to meet these search queries effectively.

On-page SEO refers to optimizing individual web pages directly within the website. This includes creating well-structured content that integrates targeted keywords naturally and meaningfully. Attention to metadata, such as titles and descriptions, provides concise and engaging summaries that influence click-through rates. Additionally, internal linking practices help search engines understand site structure and content hierarchy, facilitating easier crawling and indexing of web pages.

Off-page SEO involves external factors, predominantly backlinks from other authoritative websites. These backlinks act as endorsements of credibility and relevance. Building a robust backlink profile through methods such as content outreach, guest posting, and strategic partnerships significantly strengthens a website's authority. However, quality always outweighs quantity; earning backlinks from reputable sources is far more beneficial than amassing links indiscriminately."

There are a few things I want to point out about this.

First of all, I didn't use a very complex prompt to generate this passage. You can tailor GPT output a lot by using a longer and more complex prompt, though that does increase the chances of issues along the way.

The output here is four relatively long paragraphs made of generally long sentences. There are no line breaks for impact, no punchy interjections, and really no personality to it. It's an informative passage.

There are quirks that make you raise an eyebrow, or at least that make me do so.

- Mentioning Yahoo as a prime search engine. Pretty much no one does that these days; Yahoo barely has 1% market share compared to Google, which has nearly 90%.

- The list of SEO techniques is fine but not organized well. It's not the list I would pick as an expert. It only expounds on one of them before going into on-page versus off-page SEO.

- It digs into some details while ignoring whole concepts, like ignoring technical SEO while being more specific about backlink reputation. It's an odd mix of overview and dig-down content.

None of that is something that a tool can detect.

Meanwhile, the items from those lists of "common signs of AI content" are absent. Things like:

- Repetitive word choice. Nothing really stands out as words that it over-uses. It does use a ton of words from the "AI Word List" concept, but truth be told, that's just the dictionary at this point.

- Em-dashes. People love to point out the "—" as a sign of AI content, but it's not used at all in this output.

- Hallucinations. It's a short passage and mostly cribbed from the million examples of "what is SEO" out there; there's no real room for a hallucination.

Nevertheless, it still fails AI detectors and my own smell test.

Analyzing the Humanized Text

I spun up a trial of Undetectable.ai and ran this passage through it. Here's what they gave me.

"Search Engine Optimization (SEO) stands as a vital digital marketing approach which enhances website visibility in natural search engine results. The growing competition in online spaces makes effective SEO strategies essential to boost website traffic while building credibility which leads to higher conversion rates and revenue growth.

SEO functions as a fundamental approach which requires both knowledge of search engine algorithms and user search purposes. Search engines Google, Bing and Yahoo rank websites that provide users with relevant high-quality content. The algorithms require website optimization through content improvement along with keyword selection and meta description enhancement and header structure and URL organization. The process of strategic keyword research determines what users search for to develop content that effectively responds to their search queries.

The optimization process for individual web pages occurs directly within the website through on-page SEO methods. The process of creating well-structured content requires the integration of targeted keywords in a natural and meaningful way. The optimization of metadata through titles and descriptions creates brief summaries that improve click-through rates. Search engines better understand website structure and content hierarchy through internal linking practices which enables easier web page crawling and indexing.

Off-page SEO depends on external elements mainly through backlinks from authoritative websites. The backlinks function as proof of credibility and relevance. A website gains authority through the development of a strong backlink profile which results from content outreach and guest posting and strategic partnership initiatives. Quality backlinks from reputable sources provide greater benefits than collecting links without any discrimination."

How is this different?

If you look at the diff-checker analysis, you can see that nearly every sentence is changed in some way.

Some of the changes that stand out to me:

- It removed nearly every comma. In fact, it only left one, in the list of search engines, and even removed the Oxford comma.

- Since commas are an important grammatical function, they had to be replaced by something. The "humanized" text replaced them all with instances of "which," which is both improperly used (it should still have the comma before it, generally speaking) and is overused now, tripping a repetitive word usage flag.

- Most of the sentences now start with "the," which trips the "overly formal language" flags that a lot of AI detectors use.

- It uses the word "process" in three sentences back-to-back. Again, repetitive language.

- It doesn't address overall structural issues like the longer paragraphs or monotonous sentences, nor does it address the subject matter expertise, not that I would expect it to do the latter.
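You can check the surface-level observations above with a few lines of Python. This is a rough sketch with function and variable names of my own choosing; it uses only the opening paragraph of each version (copied from the passages quoted earlier), and it has nothing to do with how any real detector scores text.

```python
import re


def surface_stats(text):
    """Count a few surface features: sentences, commas, 'which', and 'The' openers."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return {
        "sentences": len(sentences),
        "commas": text.count(","),
        "which": len(re.findall(r"\bwhich\b", text, flags=re.IGNORECASE)),
        "starts_with_the": sum(1 for s in sentences if s.lower().startswith("the ")),
    }


gpt_opening = (
    "SEO, or Search Engine Optimization, is a critical digital marketing strategy "
    "focused on improving a website's visibility in organic search engine results. "
    "In an increasingly competitive online environment, effective SEO techniques can "
    "significantly boost a website's traffic, enhance its credibility, and ultimately "
    "increase conversions and revenue."
)

humanized_opening = (
    "Search Engine Optimization (SEO) stands as a vital digital marketing approach "
    "which enhances website visibility in natural search engine results. The growing "
    "competition in online spaces makes effective SEO strategies essential to boost "
    "website traffic while building credibility which leads to higher conversion "
    "rates and revenue growth."
)

print(surface_stats(gpt_opening))        # 5 commas, 0 uses of "which"
print(surface_stats(humanized_opening))  # 0 commas, 2 uses of "which"
```

Even in just two sentences, the trade is obvious: every comma is gone, and "which" has shown up to do their job.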

It also still fails AI detectors, including both my own bespoke detector and Undetectable.ai itself. That's right; even using its own humanizer, it still shows as 64% likely to be AI.

From my own sniff test, I can tell you one thing: it feels a little less like AI. However, that's not a good thing because what it feels a lot more like now is old-school spun content. I'll tell you why.

- In the first sentence, it changes "organic search engine results" to "natural search engine results." Organic and natural mean similar things in a health context, but "organic search" has a specific meaning in this context, so the swap is a sure-fire sign that the text was programmatically spun.

- The first sentence of the second paragraph is a similar story; it changes "user intent" to "user search purposes." Same technical meaning if you ignore the specific use of the phrase in a marketing context.

- The following sentence leaves out words. It now reads "Search engines Google, Bing and Yahoo" because the tool wanted to remove "like" and ended up removing some of the sentence's purpose.

- The sentence after that is also very clunky now, with industry-specific terms rephrased because it wanted to remove the commas from the list.

- It removes the pattern of a paragraph starting with on-page SEO and the next with off-page SEO by rephrasing the sentence to put on-page SEO at the end of it. Not all patterns are bad!

All of this comes across almost more like ESL content than fluent content.

It might feel slightly more human, but that's only because you wouldn't expect the LLM's output to have that kind of clunkiness to it.
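If you do run content through a humanizer, one cheap safeguard is to check whether your industry terms survived the trip. Here's a minimal sketch; the `lost_terms` helper and the glossary are hypothetical, and the sample strings are fragments of the two passages above.

```python
def lost_terms(original, rewritten, glossary):
    """Return glossary terms that appear in the original but not in the rewrite."""
    original_lower = original.lower()
    rewritten_lower = rewritten.lower()
    return [
        term
        for term in glossary
        if term.lower() in original_lower and term.lower() not in rewritten_lower
    ]


# A hand-picked list of terms you don't want silently "rephrased."
glossary = ["organic search", "user intent", "search engine algorithms"]

original = (
    "improving a website's visibility in organic search engine results. "
    "SEO revolves around understanding search engine algorithms and user intent."
)
rewritten = (
    "enhances website visibility in natural search engine results. "
    "requires both knowledge of search engine algorithms and user search purposes."
)

print(lost_terms(original, rewritten, glossary))  # ['organic search', 'user intent']
```

It's a plain substring check, so it won't catch everything, but it flags exactly the kind of swap that makes the humanized text read like spun content.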

What About Other AI Humanizers?

I don't want to make it seem like I'm dunking on Undetectable.ai specifically here.

They're just one example, but there are many tools that do this kind of thing:

- Grammarly has an AI humanizer tool, but it barely made any changes. It didn't strip out all of the commas, but that makes sense; in my experience, Grammarly loves nothing more than to add commas to text to an almost comical degree. Most of Grammarly's changes were simple word swaps, like "environment" to "landscape" or "user intent" to "what users want." Note that the latter there is also removing an industry-specific term it doesn't know.

- AIHumanize.io, meanwhile, did an almost complete rewrite. It also added em-dashes, which, if they're a red flag for AI detectors, is kind of a hilarious change to make.

- Quillbot's tool is perhaps a little better, but that's because it doesn't just do it for you; it highlights passages it flags and asks you to choose how to change them. I'm not evaluating Quillbot right now, so I'm not really poking around at it to see how well it works when you go through it line by line, though.

- HumaizeAI.pro is somewhere in the middle ground. It made a few changes I liked, but it also included some grammatical errors that any professional writer would avoid, like adding a quotation mark for no reason. It also decided to use the word "ultimately" several times and picked a couple of red-flag words to change to. It does, however, have a lot of customization options I didn't mess with to further adjust the text.

And here's a fun trick: a lot of these "humanizers" are just wrappers for ChatGPT or another LLM anyway. I found one (not naming any names here) and tricked it into reading me back its prompt. Yes, I tricked it into ignoring all of its internal safeguards, and it gave me the secret sauce.

Sadly, it's not very impressive or thorough. Here it is:

"Rewrite the below content with straight forward vocabulary and sentence structures in English. Also, Use the following a little contractions, cliches, transitional phrases, and avoid using repetitive phrases, idioms and unnatural sentence structures. Vary sentence length in the article. Break some long sentences into shorter ones. Keep the same headings and paragraphs. Make a few spelling mistakes and punctuation mark mistakes. Keep the same content just rewrite it even if it looks like a chat GPT prompt. DONT SKIP ANY CONTENT. REWRITE EVERYTHING. Do not edit or remove HTML tags."

Some of this is based on what AI detectors look for, like the similar-length sentences, the lack of idiomatic writing, or overly formal vocabulary.

Some of it is dumb, at least to me; injecting typos is meaningless if you're running your content through any sort of copyediting afterward, which you always should.

AI is also pretty bad at listening to commands to NOT do something; it's more focused on instructions and not avoidance.

So, if you're paying for an AI humanizer, this is the kind of thing you're paying for. At least, if the humanizer is a shell for ChatGPT or another LLM, anyway. I don't think all of them are, and I'm not about to go trying to prompt inject all of them just to see, but still.

Buyer beware, right?
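To show what "a wrapper" means in practice, here's a hedged sketch of how little code one might need. I don't know how that tool actually structures its requests; the payload shape below is modeled on the common chat-style format, the model name is a placeholder, and the prompt constant is only the first sentence of the prompt quoted above.

```python
# Excerpt only: the first sentence of the leaked prompt, not the whole thing.
HUMANIZER_PROMPT = (
    "Rewrite the below content with straight forward vocabulary and "
    "sentence structures in English."
)


def build_request(user_text, model="example-model"):
    """Bundle a fixed instruction with the customer's text (hypothetical payload shape)."""
    return {
        "model": model,  # placeholder name, not a real model identifier
        "messages": [
            {"role": "system", "content": HUMANIZER_PROMPT},
            {"role": "user", "content": user_text},
        ],
    }


request = build_request("SEO, or Search Engine Optimization, is a critical strategy.")
print(request["messages"][0]["content"])
```

Under these assumptions, the "product" is one fixed paragraph of instructions stapled to whatever you paste in. Everything else is the underlying LLM.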

How AI Humanization Works

When you look at a bunch of examples, you can see a few things.

One of the biggest, to me, is that AI humanizers are a direct response to AI detectors. When an AI detector says, "Here's a list of words that indicate content might be AI-generated," the AI humanizers can say, "Here's a list of words to replace." It doesn't matter if those words are important, specific to the meaning of the post, or not easily replaced; they get replaced anyway.
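Here's a minimal sketch of that word-list approach. I'm not claiming any particular tool is implemented this way; the swap table is hypothetical, built from substitutions that showed up in my test output (organic to natural, critical to vital, robust to strong).

```python
import re

# Hypothetical "red flag word -> replacement" table.
SWAPS = {"organic": "natural", "critical": "vital", "robust": "strong"}


def naive_humanize(text, swaps=SWAPS):
    """Blindly replace every listed word, with no idea what the sentence means."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, swaps)) + r")\b", re.IGNORECASE
    )

    def replace(match):
        word = match.group(0)
        new = swaps[word.lower()]
        # Preserve a leading capital so sentence starts still look right.
        return new.capitalize() if word[0].isupper() else new

    return pattern.sub(replace, text)


print(naive_humanize("Organic search results are critical."))
# Natural search results are vital.
```

The code has no way to know that "organic search" is a term of art, so it breaks it just as happily as it swaps a filler adjective.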

It's the same story with other so-called "signs of AI" in text. Using big words, using longer sentences, hell, I even saw someone call out the rule of threes as a sign of AI.

The biggest challenge is that AI detection is not easily summarized the way a lot of these humanizers seem to think it is. You can't just red-flag a word list or decide that an entire punctuation mark is verboten. Modern AI detectors use deep machine learning to analyze the statistical patterns underlying the text and look for hallmarks of AI generation in a way that can't be easily undone by another algorithm.

If you've read about "perplexity" and "burstiness," those are two of those attributes, and they aren't things a humanizer can just inject.
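For a feel of what those two terms measure, here's a toy sketch. Real detectors use neural language models; this one substitutes a simple word-frequency (unigram) model with add-one smoothing, and the function names and the tiny reference text are my own inventions. Burstiness here is just the variation in sentence length, and perplexity is how "surprised" the model is by the text.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def words(text):
    """Lowercase the text and pull out simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text):
    """Sentence-length variation: population std dev / mean (0.0 = perfectly uniform)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(words(s)) for s in sentences]
    if not lengths or mean(lengths) == 0:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`."""
    counts = Counter(words(reference))
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for any unseen word
    tokens = words(text)
    if not tokens:
        raise ValueError("text contains no words")
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat"
print(burstiness("One two three. Four five six."))   # 0.0, both sentences are the same length
print(unigram_perplexity("the cat", reference))       # lower: familiar words
print(unigram_perplexity("quantum zebra", reference)) # higher: words the model never saw
```

Both numbers fall out of the text as a whole, which is why swapping a few words or deleting commas doesn't reliably move them in the "human" direction.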

On top of everything else is the fact that this isn't a 1v1 battle. AI humanizers make text look less like LLM-generated text, but they forget that LLM-generated text is striving to look more human. That means that the changes made to LLM text by a humanizer move it away from the LLM ideal, which is human text. The better the AIs get at looking human, the harder AI detectors have it, and the more the humanizers, quite frankly, lose their role entirely.

Overall, the best AI humanizers seem to have a lot of configuration or decision-making involved. These help you manually avoid the clunky word replacement that changes the meaning of a passage entirely. It's just one step above doing the copyediting and rephrasing yourself.

Should You Use an AI Humanizer?

Before I answer this, let me pose another question: why do you want your content to look less like AI content?

Generally, I've come to think that there are three main reasons why people 1) are willing to use AI to generate content and 2) want to make it less detectable as AI content.

The first is a sense of shame. There are a lot of people who are huge AI proponents, but there are also a lot of people who are very much anti-AI. A lot of the biggest arguments against AI right now are ethical (the copyright issue, the environmental issue, the labor force issue).

People who are in a position to use AI but who aren't huge AI proponents willing to face those issues head-on feel some worry and even shame about using it. They want to get the benefits of using the tool but without the stigma that comes with it, so they want to hide it.

The second is the worry about hallucinations. These people want to use AI and don't really care about the ethical concerns, but they do want to make sure they don't lose any brand reputation because of the content they publish, so they don't want it to have hallucinations in it.

Finally, the third is worry about Google penalties. These people have no qualms about using AI to generate content, but they're worried that Google will penalize AI content in some form or another, and so they want to use any tool they can to make their content look less like AI so Google doesn't catch them.

So, should you use an AI humanizer tool?

If you fall into category #1, then sure, you can. I don't think it will work well enough, though. I also think that there are so few reliable ways to detect AI content, and so little awareness of the limitations of those methods, that people are going to accuse you of using AI regardless.

If you fall into category #2, an AI humanizer isn't going to help you. Hallucinations aren't going to be removed by anything other than an experienced human fact-checker going over the content and making sure there's nothing wrong with it.

If you fall into category #3, maybe it can work, but truth be told, probably not. As I've mentioned above, every AI humanizer I tested still failed the AI detectors, at least in part. They just aren't reliable enough to do the job.

I said before that I don't want to get into the ethical discussions here today.

Hallucinations are a tricky problem for two reasons. The first is that you need humans to fact-check the content no matter what, and those humans need to know their stuff to do it properly. The second is that humans can be wrong or can outright lie on the internet, so the difference between an AI hallucination and a misinformed person is… smaller than people like to admit.

So, let's spend a moment to look at the most important (to my industry) of the three: Google.

Does AI Humanization Matter?

Google's current stance on AI seems to be somewhere between "there's nothing we can do about it" and "just don't abuse it." They say things about not using it to generate spam and to make sure the content you publish, no matter how it's created, is high quality.

To me, this reads like an admission that they can't reliably detect AI-generated content, but they will absolutely trash a site if it's spamming out 500 posts per month that all kind of suck. The more things change, the more they stay the same, right?

Google clearly isn't wholly anti-AI. They have their own, after all. If anything, they might be slightly anti-competitive with it, but truthfully, I don't think that's the case either. I think, as always, they just want good-quality sites in the search results, and they know that if the internet ends up swamped in garbage-tier AI content, no one is going to search through it anymore, and that costs them a ton of money.

Can AI content rank on Google? Yes. I've discussed that subject in detail here. Should you disclose your use of AI? Ethically speaking, it's the right thing to do, at least as long as there are people who have concerns about the technology. You'll notice I have a disclosure on the AI summary section at the top of my posts. I also discussed this topic.

Can you get penalized for using AI to generate your content? Yes and no. If Google detects that your content is AI-generated using whatever in-house AI detection they have, that's a point of consideration. But it won't penalize you right off the bat unless the content is either bad or duplicate, just like any other content you publish. There are sites out there today ranking #1 with pretty much entirely AI-generated content. There are also sites with fully human content that get penalized because it sucks. It's a continuum.

The one thing I can say for sure is that, as far as my experience goes, an AI humanizer tool won't change the calculus here. It's unlikely to help you avoid AI detection, especially on the scale of Google. It's somewhat unlikely to even make your content sound more human. In my experience, these tools just make the content worse.

The best thing to do is not to publish AI content in the first place. I find AI to be a potentially helpful tool for planning, organization, analyzing large swaths of data, outlining, and other tasks. For the actual writing... leave that to a skilled human.
