The Ultimate Guide to Adding Disclosures to AI-Written Content

AI is all the rage today, and as a tool, it has the potential to be a very powerful asset in the hands of growth hackers, marketers, and content creators. At the same time, its foundations in theft, its widespread use to spam the internet for quick-buck ad revenue, and the way executives are using it as an excuse to cut thousands of employees make it an extremely contentious topic.

One of the biggest discussions surrounding AI, aside from copyright issues, is whether or not it's considered spammy to use it for generating content. On one hand, you have companies like OpenAI allowing pretty much anything, and companies like Jasper providing frameworks you can use to convert a handful of keywords and dashboard settings into wholly-created content.

We've all been waiting with bated breath to see what stance Google will take. Some believe they'll fall on the side of AI since they've been making their own version with Gemini, and they've integrated AI features directly into Google Docs. Others believe it falls squarely against their spam rules, the same ones that catch spun content and other "algorithmically generated content."

The day finally arrived, and Google issued both guidance and a new algorithm update that promised to clarify the situation.

What do we get? Let's take a look.

General Content Guidelines

First of all, we can take a look at Google's guidelines for general content, the kinds of things you see on blogs like this one.

You can read Google's guidance directly from blog posts in their developers section, like this one and this one.

Google does not outright come out and prohibit the use of generative AI to create content. However, they do clarify that AI generation can fall under their rules against scaled content and algorithmically generated content.

"We've long had a policy against using automation to generate low-quality or unoriginal content at scale with the goal of manipulating search rankings. This policy was originally designed to address instances of content being generated at scale where it was clear that automation was involved.

Today, scaled content creation methods are more sophisticated, and whether the content is created purely through automation isn't always as clear. To better address these techniques, we're strengthening our policy to focus on this abusive behavior — producing content at scale to boost search ranking — whether automation, humans, or a combination are involved. This will allow us to take action on more types of content with little to no value created at scale, like pages that pretend to have answers to popular searches but fail to deliver helpful content."

Basically, they're saying that using AI as an assistant to help you write content isn't against their rules. What is against their rules is using AI to spin up hundreds or thousands of blog posts on a new domain to try to dominate search results. As anyone who has used Google in the last few years knows, there are a ton of sites that are essentially spun content, but since they're created using AI and templates that differ enough from their sources, they don't get caught and penalized.

Google is trying to reduce the visibility of these sorts of sites since they are usually unhelpful compared to other search results.

Fortunately, Google has some resources to help identify this kind of content. When we do a search and land on a site, we might have a hard time knowing for sure whether it was AI-generated. Google, on the other hand, can look at patterns in its index, see something like "well, this site sprang up out of nowhere with largely similar content on a niche, all published at once," and recognize that it's probably an AI spam site.

A common technique to avoid this was to buy up an expired domain and fill it with AI-generated content. That way, the age of the domain didn't readily give away the fact that it was created out of whole cloth. Google has also decided that this is now considered a spam technique.

"Occasionally, expired domains are purchased and repurposed with the primary intention of boosting search ranking of low-quality or unoriginal content. This can mislead users into thinking the new content is part of the older site, which may not be the case. Expired domains that are purchased and repurposed with the intention of boosting the search ranking of low-quality content are now considered spam."

All in all, these are some good rule changes aimed at undercutting the most common abuses of AI.

Do these rules go far enough? In my opinion, no, not yet; the problems with AI are only going to get worse as the AIs get better, and it's going to put a lot of people out of work. Worse, since AI can't synthesize unique thoughts, come to data-driven conclusions, or perform research, there's going to be an increasing divide between the AI-generated low-tier slop and the people performing actual, unique research. Unfortunately, since things like unique research cost money and require resources, this is all going to make it even harder to break into an industry if you aren't already well-endowed with funding and other resources.

Legitimate and High-Quality Use of AI

While Google does not prohibit the use of AI to generate content, they urge extreme caution when using it, in a few ways.

The first is that they're further pushing E-E-A-T in their algorithms. E-E-A-T is their Experience, Expertise, Authoritativeness, and Trustworthiness metric. Essentially, this is how they are trying to go beyond "Is the content accurate?" and into "Can you trust the person delivering this information?" AI, since it cannot discern fact from fiction or hallucination, and since it is not a person with critical thinking skills, does not have expertise, experience, trust, or authority.

E-E-A-T manifests in a lot of small signals across a site. Author bylines, fact-checking, consistent information, consistent tone and style, brand reputation building, and citations; all of these relatively small factors combine to make a source more trustworthy. The idea is that if you are presented with two sites, both with similar content, and one of them has citations, fact-checking, and an author bio from someone you know, while the other has none of those things, it's clear which one is more trustworthy.

"But we use AI to help generate content, a human reviews and edits it!" This is the rallying cry of AI users, and it's somewhat true. However, three things go wrong here.

- Attaching a human name to a post and saying anything AI-created was reviewed is easy and can just as easily be a lie.

- Humans can be lazy and, in cases where they aren't questioning everything the AI says, can pass hallucinations without scrutiny.

- If the AI is just used for filler, wouldn't the content be better off without the filler anyway?

Basically, Google wants you to stake your reputation on your content, and if you're using AI to generate it – especially if you have little oversight over it – you should be prepared for the consequences.

Google encourages (but does not yet require) that AI-generated content have some sort of disclosure. This can take two forms. The first is in an accurate author byline; instead of claiming an individual wrote the post, say the individual used AI to write the post and reviewed it. (If this makes the byline less valuable, well, yeah, that's a consequence you need to consider when using a tool that eliminates the value of the labor.)

The other thing Google says you can do is provide background information about your use of AI. This can be a system page similar to a privacy policy or about us page, and should include how you use AI, how AI is fact checked, and where AI is not used.

Google has no rules or qualms against you using AI to, for example:

- Generate image descriptions for alt text (as long as they're accurate)

- Generate meta descriptions using keywords to describe a site (which Google barely uses anyway)

- Generate outlines or topic ideation documents that a human then uses to write the actual content

The problem largely comes from using AI to create content from whole cloth.

Specific Rules for Narrow Content

Google is not a company to forsake nuance. As we all know from previous targeted updates for things like YMYL (Your Money and Your Life) topics, they are more than willing to put stricter rules on specific kinds of content when those kinds of content can do more harm, either to people in general or to their company in the fallout.

Fortunately, as this year is an election year, Google has already issued instructions for AI's use in political content.

Issued all the way back in September, Google's disclosure requirements are as follows:

"…require that all verified election advertisers in regions where verification is required must prominently disclose when their ads contain synthetic content that inauthentically depicts real or realistic-looking people or events. This disclosure must be clear and conspicuous, and must be placed in a location where it is likely to be noticed by users. This policy will apply to image, video, and audio content."

Since AI is good enough to fool people now, even if it falls apart under scrutiny, we all know that the general population isn't going to give it that scrutiny. So, to prevent political agents from using AI to irreparably damage society, they're requiring disclosure.

Unfortunately, there are a few issues with this policy.

First, it only applies to ads run through Google Ads. Other advertising networks will have to implement their own policies, and you can bet that a lot of them won't be as quick on the draw.

Second, it only applies to "verified election advertisers." As we see all the time, all sorts of advertisers slip through the cracks of this kind of verification; they don't care that their ads are pulled as long as they run for a bit and get some exposure.

Third, Google also says this:

"Ads that contain synthetic content altered or generated in such a way that is inconsequential to the claims made in the ad will be exempt from these disclosure requirements."

Now, their examples are things like using AI to resize images to fit ad dimensions or AI-based red-eye removal or background alterations that don't impact the context of the ads. Maybe this will be fine. Personally, I think any flexibility in the rules at all is a gray area people will abuse to ride the line, and that can cause both a huge moderation burden and a problem for people who see the ads in the gray area.

Amy Klobuchar issued a statement that is hard to disagree with: making it a voluntary commitment from political advertisers to tell the truth doesn't go far enough.

AI and You(Tube)

Google owns YouTube, so it's not much of a surprise that similar rules are going into effect with YouTube content. They've issued their own statements on the use of AI in YouTube. Essentially, they're requiring that creators identify when they've used AI to alter or generate content in a video, and that information will be used for a disclosure on the video.

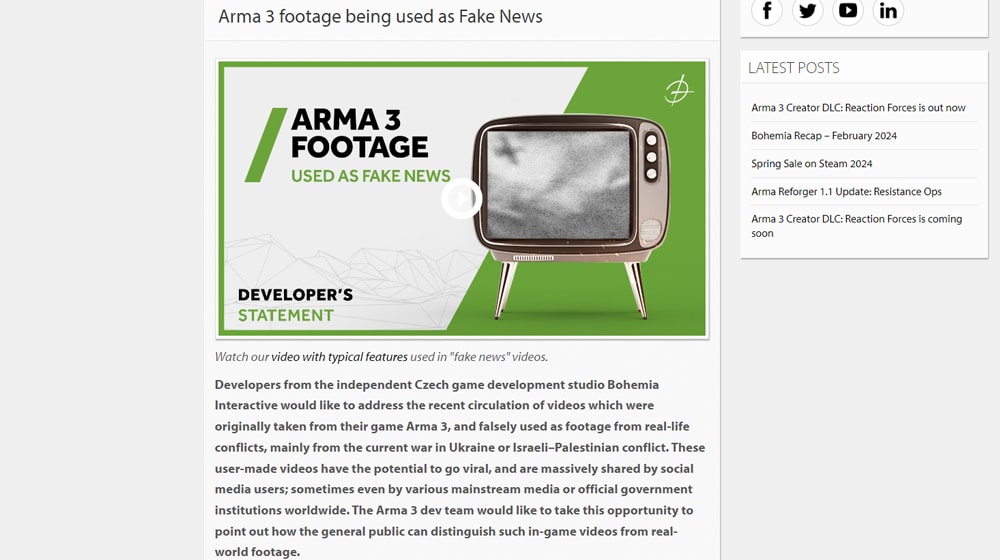

Google/YouTube doesn't particularly care if you're using generative AI to write the script for a fiction story or something inconsequential. They're mostly focused on videos that push a narrative on a contentious issue, like healthcare, politics, or ongoing conflicts. We already see this a lot, with semi-realistic video game footage being misattributed to wars like the ones in Ukraine and Gaza; AI only makes it harder to identify the source.

Violators can have their content removed, be suspended from the YouTube Partner Program, or face other penalties. Will YouTube be proactive enough to stop problems before they start, or will this be a case of "the video had 100 million views so the damage is already done"? That remains to be seen.

This is also a disclosure added to the video description, and it's all too easy to ignore those. Personally, I think the disclosure should be added to the video itself, possibly as a pre-roll notification similar to pre-roll ads, but for now, Google probably considers that too intrusive and too fast. We'll see if they change their tune later.

What Should You Do?

All of this brings us to one final question: what should you do?

The easiest option is to dramatically limit your use of generative AI to "background" and mechanical tasks. Using AI to generate topic ideas? Fine, though there are better options available. Using AI to fill out Schema code or generate meta descriptions or image alt text? Fine, as long as it's accurate. Using AI to take key points you want to make and generate an outline around them for you to write? Go nuts.

However, using AI to generate full content is where things get tricky. If you're going to do this, I recommend three layers of protection.

- Do a very thorough check to make sure every fact the AI generates is accurate. You can ruin your credibility if you let an AI hallucination slip through.

- Add a disclosure to the page, sort of like your usual affiliate link disclosure, that AI was used in the generation of the content. If possible, describe how.

- Add a page to your site on the same tier as a privacy policy that explains your use of AI. Doing so may lose you some followers from the people who are vehemently against AI, but that's just the risk you take in using a contentious technology.
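
As a rough illustration of the second and third layers, here is a minimal Python sketch of how a site might build a per-post disclosure notice that links to its AI usage page. Everything in it is an assumption made for the example: the `AIDisclosure` class, the `render_disclosure` function, the `ai-disclosure` CSS class, and the `/ai-policy` URL are hypothetical names, not anything Google specifies or requires.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class AIDisclosure:
    """Hypothetical container for the facts a disclosure should state."""
    reviewer: str    # the human who reviewed and fact-checked the post
    ai_role: str     # how AI was used, e.g. "draft the outline"
    policy_url: str  # link to the site-wide AI usage page (assumed path)


def render_disclosure(d: AIDisclosure) -> str:
    """Return an HTML snippet to place near the top of a post.

    All values are HTML-escaped so that names or descriptions containing
    markup characters cannot break the surrounding page.
    """
    return (
        '<aside class="ai-disclosure">'
        f"AI was used to {escape(d.ai_role)}. "
        f"Reviewed and fact-checked by {escape(d.reviewer)}. "
        f'<a href="{escape(d.policy_url, quote=True)}">How we use AI</a>'
        "</aside>"
    )


if __name__ == "__main__":
    notice = AIDisclosure(
        reviewer="Jane Doe",
        ai_role="draft the outline",
        policy_url="/ai-policy",
    )
    print(render_disclosure(notice))
```

The point of keeping the reviewer and the AI's role as separate fields is that the notice says how AI was used and who checked it, rather than a vague "AI may have been involved." Adapt the wording and markup to whatever your CMS or template system actually uses.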

That's for general content, like for a business blog, a food blog, a gaming site, or what have you.

If you're operating in a more contentious sphere, like geopolitical news, election news, or anything of that nature, I really recommend not using AI at all. You want people to trust you, so even using AI for something minor that people notice can be enough to throw everything you post into question.

At the end of the day, this is a rapidly changing situation, as AI is seeing increased regulatory pressure in the EU and US, the technology continues to be developed, copyright challenges are seeing progress, and more. I would expect Google to issue at least one more set of rules in the coming months, and that alone can dramatically change everything I've written above. Just be careful out there, folks.
